Migrating from Bitwarden to 1Password

I’ve been using Bitwarden for a year or two now, after switching from 1Password when they opted to add some dumb crypto nonsense to the app. It works really well and I don’t really have any major complaints about it - the UI’s not all that great and I miss the additional record types that 1Password had, but it has a Linux client and it’s open-source and I can run the cloud portion of it myself. But, I kept seeing the new stuff that the AgileBits team was doing and, well, FOMO, so I decided to switch back to 1Password to see what the deal was. Doing that required me to move my stuff back from Bitwarden to 1Password.

The easiest-slash-obvious way to do this is to use the native 1Password importer, which will take a CSV formatted file and allow you to match the fields in the file to what’s available in 1P itself. This is a great approach but it seemed like a lot of work for my use case. (I migrated my 1P data into Bitwarden, so I have something like 12 years of data? It’s a lot.) But, this is probably the easiest way to do this if your Bitwarden vault is smaller or better organized (and especially if you don’t keep your credit card info in it or use secure notes).

The more complex method is to try to figure out some way to convert the Bitwarden vault export into a 1Password-compatible file (either a 1pif or a PUC file). Bitwarden will export in both CSV and JSON formats, and JSON is very much the best way to go. AgileBits doesn’t document its interchange formats, though. At this point, my thought was to write up some quick Python to split the Bitwarden JSON export into a few CSVs so I could re-import them into 1Password using the CSV importer.
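For the record, here’s roughly what that “quick Python” might look like. This is a minimal sketch, not what I ended up using, and it assumes an unencrypted Bitwarden JSON export with a top-level `items` list and Bitwarden’s numeric item types (1 login, 2 secure note, 3 card, 4 identity). The CSV column names are hypothetical; you’d still have to match them to 1Password’s fields in its CSV importer. Identity items are skipped entirely.

```python
import csv
import io
import json

# Bitwarden's numeric item types in its JSON export (my understanding of them):
# 1 = login, 2 = secure note, 3 = card, 4 = identity.
TYPE_NAMES = {1: "login", 2: "note", 3: "card", 4: "identity"}


def login_row(item):
    login = item.get("login") or {}
    uris = login.get("uris") or []
    return {
        "title": item.get("name") or "",
        "url": (uris[0].get("uri") or "") if uris else "",
        "username": login.get("username") or "",
        "password": login.get("password") or "",
        "notes": item.get("notes") or "",
    }


def note_row(item):
    return {"title": item.get("name") or "", "notes": item.get("notes") or ""}


def card_row(item):
    card = item.get("card") or {}
    return {
        "title": item.get("name") or "",
        "cardholder": card.get("cardholderName") or "",
        "number": card.get("number") or "",
        "expiry": f"{card.get('expMonth') or ''}/{card.get('expYear') or ''}",
        "cvv": card.get("code") or "",
        "notes": item.get("notes") or "",
    }


# Identities (type 4) are deliberately not handled in this sketch.
ROW_BUILDERS = {"login": login_row, "note": note_row, "card": card_row}


def split_export(export_text):
    """Return {record kind: CSV text} for each kind that has at least one item."""
    data = json.loads(export_text)
    rows = {kind: [] for kind in ROW_BUILDERS}
    for item in data.get("items", []):
        kind = TYPE_NAMES.get(item.get("type"))
        if kind in ROW_BUILDERS:
            rows[kind].append(ROW_BUILDERS[kind](item))
    csvs = {}
    for kind, kind_rows in rows.items():
        if kind_rows:
            buf = io.StringIO()
            writer = csv.DictWriter(buf, fieldnames=list(kind_rows[0]))
            writer.writeheader()
            writer.writerows(kind_rows)
            csvs[kind] = buf.getvalue()
    return csvs
```

To use it, read the export file, pass its contents to `split_export()`, and write each value out to something like `login.csv`. The csv module already emits `\r\n` line endings, so open the output files with `newline=""` to avoid doubled line breaks on Windows. One file per record type keeps each CSV’s columns uniform, which is what makes the field-matching step in the importer manageable.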

Fortunately, there was another way. When digging for documentation on the 1pif format, I found this post on the 1Password community site, which pointed me to a suite of converters written by the poster there, MrC: “Moving to 1Password from another password manager”. This actually does everything necessary.

The MrC Converter Suite is a Perl app that includes a ton of conversion modules for various password managers, and Bitwarden is among them. It works with the JSON export too, which is the best way to do it, as it lets the converter work natively with the different record types and the varying fields present in each record. Finally, it can export that data into either the PUC or the 1pif format. Being a Perl app makes it multiplatform too (though the included instructions only cover native Windows and macOS). I found the docs to be pretty good and the code is pretty readable, which is amazing for Perl. (Only sort of joking - I used to write a lot of Perl around the turn of the millennium and there are still scars.)

In my case, I didn’t want to install Perl on my Windows machine (and I was feeling lazy and didn’t want to do this on the Mac in another room, because I’d have to get up), so I did this on WSL using an Ubuntu 22.04 install. I needed to install a bunch of packages, which I opted to do via apt rather than try to wrangle CPAN into installing them. (Some of them failed to install via CPAN, and by that I mean they failed to compile things, and I had already gotten super tired of waiting for the compile for XML::XPath. It took forever on a fast machine.) These are the packages I installed:

sudo apt install libdate-calc-perl libarchive-zip-perl libxml-xpath-perl

There were a couple of others I searched for, but they ended up being installed along with the packages above; just searching apt for the CPAN module name worked fine. These were Archive::Zip and Bit::Vector.

After doing this, I ran the conversion utility: perl convert.pl -v bitwarden <file>. This failed; I’m not sure why, but something about my input file broke it and I kept getting duplicate key errors. The fix was to specify the 1pif output format rather than the default PUC file. So, the command was perl convert.pl -v -F 1pif bitwarden <filename> -o <outputfile> (by default it’ll try to put your output file in ~/Desktop, which I didn’t have).

The other problem was importing the file. The latest version of 1Password actually doesn’t support importing their own files. Or, rather, it doesn’t support importing their 1pif format ones. So, I installed 1Password 7, which is still handily available on the website under the ‘Using an older computer?’ heading at the bottom. I’ll upgrade to 8 later. I think 8 was also part of the reason I was sort of annoyed with 1Password, so maybe I won’t do that, actually. Anyway, once you have 1Password 7 installed, you can import the file. I opted to do this into a fresh vault. Everything seemed to work fine, though the create and modify dates all got trashed. That’s a minor thing, though. Importantly, my credit card info is in there correctly, the secure notes all look good, and the actual passwords are all in the right spot. My tags seem to work too, including my all-important Security Questions one. (Because security questions are terrible and bad, I just generate passwords for ‘em.)

If I’m being honest, one thing I don’t like about this whole process is that the conversion suite isn’t somewhere more visible. It’d be nice if it were up on GitHub or something. Maybe I can use it as inspiration (or at least a spec) to make up some Python packages for the file formats.

So, this all worked fine, and now other than the logistics of installing it everywhere and updating browser plugins, I’m pretty much back to 1Password.

Stupid Computer Drag Racing

Two mini PCs, facing off against each other in a race that’s somewhat network dependent. What fun!

I got a couple of those weird mini NUC-style PCs. They’re very cosmetically similar PCs, of the “AK2” variety, that you can get on the Amazon for between $70 and $175 depending on what deals are going on and spec. They were bought for other things, but I figured why not see what the difference is between a couple of generations of Celeron?

Similar things on each: both have 2x HDMI ports, a smattering of USB 2 and 3 ports, RTL8111-family GbE network, onboard single-port SATA, AC wireless (one with an Intel card, one with a Realtek). The differences are memory, CPU, and storage (outside the SATA).

AK2: J3455 - Celeron J3455 Apollo Lake (4c4t), 6GB RAM, 64GB eMMC, no NVMe slot (there is an open slot but it’s Mini-PCI for some reason)

AK2 “Pro”: N5095 - Celeron N5095 Jasper Lake (4c4t), 12GB RAM, 256GB NVMe SSD (this came with an SSD that threw a ton of errors during Ubuntu installation. I swapped it for a known-good 256GB drive, but I’m not sure if that was just weirdness or if the pack-in drive is flaky)

To do the drag race, I set both of these up with Ubuntu Server 22.04 LTS with full updates, pyenv, and Docker Engine, and connected them to my network via Ethernet. The Ethernet connection is somewhat bottlenecked, as I’m using the two Ethernet ports on the TP-Link Deco P9 mesh pod in the room where they are, and that’s generally using the slower HomePlug powerline backhaul to the rest of the network. They ranged from 7-10MB/s when both were hitting the network simultaneously to about 15MB/s when one got full shouting rights over the cable, and since they were run side by side, they were basically sharing bandwidth the whole time.

The workload I chose was setting up an Open edX devstack instance on each from scratch. Open edX is a pretty big thing - a full “large and slow” setup ends up with 14 Docker containers - and there’s a smattering of compiling stuff and decompression and database ops and all that, so it seemed like a good fit. (Plus, I’m really familiar with it. The day job mostly entails writing software that interfaces with Open edX in some manner, so I’ve run it on much faster systems than these two.) However, it’s worth noting that some of these steps are very network bound, and those steps are noted as such. I did include the preliminary Python setup steps here too, so that’s a lot more compiling.

Here are the results. The times listed are the real (wall-clock) time reported by time(1).

| Step | J3455 | N5095 | Notes |
|---|---|---|---|
| pyenv install 3.11.0 | 10m40s | 05m20s | |
| pyenv virtualenv | 00m12s | 00m05s | |
| make requirements | 01m35s | 01m09s | this step is pretty network dependent |
| make dev.clone.https | 04m56s | 05m00s | this step is pretty much just network access (cloning GH repos) |
| make dev.pull.l&s | 10m20s | 09m39s | yup, a lot more network, this time Docker stuff |
| make dev.provision | 108m54s | 51m32s | this one is not network |

Round 2: now with identical TeamGroup AX2 SATA SSDs (512GB) connected to onboard storage and fresh install of Ubuntu Server 22.04. Some of the network speeds went up here; the machines got kinda out of sync and so they had the network to themselves for a bit.

| Step | J3455 | N5095 | Notes |
|---|---|---|---|
| pyenv install 3.11.0 | 10m40s | 05m22s | |
| pyenv virtualenv | 00m12s | 00m05s | |
| make requirements | 03m35s | 01m11s | this step is pretty network dependent |
| make dev.clone.https | 04m04s | 06m33s | this step is pretty much just network access (cloning GH repos) |
| make dev.pull.l&s | 09m22s | 07m31s | yup, a lot more network, this time Docker stuff |
| make dev.provision | 90m03s | 43m48s | this one is not network |

The most telling of these are the first and last results - pyenv install 3.11.0 and make dev.provision are where you can really see the difference a couple of generations of Intel architecture improvements make. As a reminder, these two chips are about 5 years apart: Apollo Lake (2016, Goldmont cores) to Jasper Lake (2021, Tremont cores), with the integrated graphics going from the Skylake-era generation to the Ice Lake-era one. Interestingly, the performance difference is about the same as the cost difference, roughly 2x on both counts: the J3455 system was about $75 and the N5095 system was about $150.
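To put actual numbers on the performance-versus-price comparison, here’s a quick Python check that converts the two CPU-bound rows from each table into seconds and divides. The times are copied straight from the tables and the prices from the paragraph above; nothing else is assumed. It works out to 2.0x and 1.99x for pyenv install and 2.11x and 2.06x for make dev.provision (rounds 1 and 2), against a 2x price ratio.

```python
def to_seconds(t):
    """Convert a duration like '108m54s' (as written in the tables) to seconds."""
    minutes, seconds = t.rstrip("s").split("m")
    return int(minutes) * 60 + int(seconds)


# (J3455, N5095) real times for the CPU-bound steps, from the two tables above.
RESULTS = {
    "round 1": {"pyenv install 3.11.0": ("10m40s", "05m20s"),
                "make dev.provision": ("108m54s", "51m32s")},
    "round 2": {"pyenv install 3.11.0": ("10m40s", "05m22s"),
                "make dev.provision": ("90m03s", "43m48s")},
}


def speedups(results):
    """How many times faster the N5095 finished each step, to two decimals."""
    return {
        (rnd, step): round(to_seconds(slow) / to_seconds(fast), 2)
        for rnd, steps in results.items()
        for step, (slow, fast) in steps.items()
    }


if __name__ == "__main__":
    for (rnd, step), ratio in speedups(RESULTS).items():
        print(f"{rnd:8} {step:22} {ratio}x")
    print("price ratio:", 150 / 75)
```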

Neither of these systems is particularly performant (and they’re probably gonna lose those 512GB SSDs), but they make good point-of-need systems for lower-end tasks. They’re pretty small - roughly 5in square and about 3in high. The J3455 is going to be a Home Assistant box because it’ll outperform the Raspberry Pi 3 that’s currently doing that task and it’ll fit nearly anywhere.

A couple weird hardware things I’ve noticed:

- They both have a USB-C under the lid. You can get power out of it, but it doesn’t seem to do anything. I plugged a drive into it and nothing.

- The J3455 has a micro SD card reader on the board (that evidently works). The N5095 one doesn’t.

- The J3455 has a mini PCI slot on it. I was thinking maybe I could put an M.2 2242 drive in it, but nope! I suppose you could use it for a WWAN modem or something, though. Do they still make those in mini-PCI? I have a CDMA one floating around; I could try it to see if it works in the slot.

- If you get one and take it apart, be careful about the WiFi antennas. I disconnected one taking apart the J3455 unit, and in the process of trying to wedge the connector underneath the plastic thing they glued down to the top of the WiFi module (to keep the antennas connected), I really broke the other one. Surprisingly, it still connects to my local network, but that may be a function of it being basically next to one of the mesh pods.

- I also learned that Realtek USB WiFi NICs are less than great for use in Linux.

Most of this was from some videos by Goodmonkey on YouTube. He had some better luck with the AK2/GK2 pricing than I did. (But I might also look at deploying these TP-Link Omada WiFi dingles..)

Mastodon Week 2

After another week of Mastodon instance running, I’ve learned a few more things. So, here they are.

Sizing: Sizing was a problem! Turned out this was due to some choices I’d made (which I’ll discuss later). By the end of things, I went from a giant Sidekiq job backlog to none at all, and from a 2 vCPU/4GB droplet to a 4 vCPU/8GB droplet. This is actually too big now but it’s going to stay that way until I get some actual monitoring going on the machine. DigitalOcean provides some but I’d like some better stuff.

Relays: I added like 7 relays to the server to help grab stuff for my federated timeline. Relays are sort of a garden hose of posts - if you’re connected to a relay, your posts get aggregated and resent by the relay to the other connected instances. For me, this ended up creating two problems.

- I had too many of them and that spawned a ton of jobs that my smaller droplet couldn’t keep up with. If you want to add a relay to your instance, maybe only do one or two. (And you’ll want to be very judicious about what relays you add.)

- As for the relays I’d added from this list that I’d mentioned in the previous post: yeah, don’t do that. If you add a relay, you need to go through the published connections list to see who’s connected to the relay first or you’re going to get a bunch of hate speech and shitposting.

To be honest, once you get started following folks and rolling through hashtags and stuff like that, your instance will find things on its own. If some focused relay systems for certain communities show up in the future, then I can see that being a thing to join up with; otherwise it really does seem to take care of itself.

Upgrades: As I’d noted, the DO one-click installer shipped with version 3.1.3 of the Mastodon server software. That’s pretty old, and I upgraded it to 4.0.0rc3. (Aside: it’s nice that I can do that! One of the benefits of running your own stack.) It was a pretty easy process. The Mastodon docs mostly cover it, but this was the gist of what I ended up doing (note that this is for the DigitalOcean one-click installer environment; yours will be different if it’s not that):

- Stopped all Mastodon services.

- Installed newer Ruby and Node. rbenv is installed already, so you just use that to install Ruby 3.0.4 or newer. I added the NodeSource packages (see nodejs.org) to the apt sources list and installed Node 16 using that.

- Followed the instructions in the tagged release.
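Before following the release instructions, it’s worth confirming that the new Ruby and Node are actually the ones being picked up. Here’s a small, hypothetical pre-flight check in Python that parses `ruby -v` and `node -v` output and compares it against the versions in the steps above. Ruby 3.0.4 comes from those steps; Node 16 is treated as the floor only because that’s what I installed. The function names are made up for illustration.

```python
import re
import subprocess

# Floors taken from the steps above: Ruby 3.0.4 or newer, and the Node 16
# I installed (treated as a minimum here for illustration).
MINIMUMS = {"ruby": (3, 0, 4), "node": (16, 0, 0)}


def parse_version(text):
    """Pull the first x.y.z version out of `ruby -v` / `node -v` style output."""
    m = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if m is None:
        raise ValueError(f"no version found in {text!r}")
    return tuple(int(part) for part in m.groups())


def meets_minimum(tool, version_output):
    """Compare parsed version tuples, e.g. (3, 0, 4) >= (3, 0, 4)."""
    return parse_version(version_output) >= MINIMUMS[tool]


def check_installed(tool):
    """Run `<tool> -v` and compare it against the floor (needs the tool on PATH)."""
    out = subprocess.run([tool, "-v"], capture_output=True, text=True,
                         check=True).stdout
    return meets_minimum(tool, out)
```

Run `check_installed("ruby")` and `check_installed("node")` as whichever user actually runs Mastodon, since rbenv versions are per-user.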

I took a snapshot of my instance before starting. That took longer than the upgrade process. If you’re moving from an old version of Mastodon to a newer one and you’re a few versions behind, you should go back and re-trace the release notes to see if anything special needs to be done - in my case, going from 3.1.3 to 4, the instructions for 4.0.0rc3 included all the extra steps. I would have had to do those steps even if I hadn’t gone to 4.0.0rc3, as they were recommended in version 3.2 or something. (There’s probably a reason it isn’t done this way, but I kinda think the steps attached to the 4.0.0rc3 release should be the steps to follow going forward.)

Hashtag pinning and following: This is in 4.0 and it’s great. I heart it.

Anyway, that’s it for now. The machine is humming along nicely and as an added benefit I check Twitter far less often now. Don’t get me wrong, they’re still complementary services (to me), but I’m enjoying being on the pachyderm site. Next thing on the list is adding some more instrumentation. You get some with DigitalOcean and I have it linked into Pulseway but it’d be nice to get some Prometheus/Grafana and/or Zabbix or something running too.

Riding a Mastodon

I rolled out a vanity Mastodon instance a week ago (as of writing this) so I could get in on the Fediverse experience and start distancing myself from the bird site. It’s been an interesting week as it goes, so here are some notes I’ve collected.

Mastodon has a pretty distinct “feel” compared to Twitter.

Especially right now, going through Mastodon is a lot more like being new at a newish community meeting spot than anything else. There are a lot of introductions and people trying to find their people and figure out how things work, and there are a lot fewer people - it’s more like the party is just starting to crest, whereas Twitter is already in the deep end and getting deeper. It definitely still feels nicer and more civil, for now at least.

Visibility is a lot different.

I used the Fedifinder tool from @Luca@vis.social and that.. really didn’t help a whole lot! Turns out a lot of my followers don’t have the Mastodon handles in their bios, so it found like 5 (and hasn’t found any more since then). It may work better for you. Mostly, I’ve found people I’d like to continue following from Twitter by virtue of the fact that I’m following them on Twitter and they’ve posted their Mastodon handles there.

However, there are some other things that I’ve been doing to find folks:

- Hashtags help a lot. Especially a few that are interesting - for me, that’s #introduction, #highered, #edtech, stuff like that.

I wish there was a way to follow these tags - none of the clients I’ve used so far seem to do that. Update: Server v4 will do this!

- Looking through the instance list. I can find instances that are generally geared towards my interests and then go looking through their member directory to see if I can find folks to follow. This doesn’t work so much for the huge general purpose instances like mstdn or the official(?) Mastodon instance but for ones like the already mentioned vis.social or like mastodon.art, it can be pretty nice.

- Looking through other sites. For example, I’m on MetaFilter, so I added my Mastodon handle to my profile there and I can look up a list of other folks that have handles too.

- The federated timeline helps, of course, but I have more to say about that later.

But, there’s some caveats too. Because everything is spread out, it’s sometimes hard to see content. I’ve kinda blindly followed a few people because their bio is interesting or they’re part of another account, but I wasn’t able to see anything they’d posted because their last post was too old to hit whatever upper bound my instance or client has. It’s also sometimes kinda frustrating to really not have an effective firehose (but that’s also sorta nice too).

Choosing an instance to be on can be easy!

You can mostly just search around and find an instance that is geared towards your interests (or a portion of them) and see if you like the vibe, and then (try to) join it. There are some more general instances too, but a lot of them have turned off signups for now because of the exodus from Twitter.

..but it’s sometimes not easy

And that’s why I fired up my own instance - I didn’t really feel like I fit in with the instances I had short-listed enough to want to sign up for an account on any of them. So, I used the one-click installer on DigitalOcean to get my own vanity instance set up. This is a most decidedly user hostile way to go - I found it to be pretty easy but I’ve been running Internet-connected servers for 20+ years; if you’re not a tech folk then this is much less of an option.

However, one thing I have learned is that migrating between instances is pretty easy. There’s just a button and it’ll move your account settings (including followers) to a new account if you want to do that, even across instances. Your history doesn’t move, but it does stay wherever you were. So, that takes some of the anxiety out of the choosing process.

Maybe don’t run your own instance?

I’ve done it and I plan on sticking with it now, but there were a couple of things I wish I’d known about it beforehand.

- Visibility: I said earlier that it’s a lot different, but it’s even more differenter if you’ve started up your own instance, because they don’t come federated out of the box. In other words, you’re staring at a blank page until it knows about other servers. This is pretty quickly resolved - as you follow people, your instance will start gathering toots from their instances - but it’s a little discouraging to begin with. I also worried a bit about whether or not anything I was posting was making it out of my bubble, which it probably wasn’t until I started following people.

- Sizing: The one-click DigitalOcean installer doesn’t give you a sizing guide and neither do the official server docs (or I never saw one, at least) but you probably need to sock more resources at the thing than you may think. I thought I was going to be ok with a 2 vCPU, 4GB, 25GB disk instance and, well, no; it’s fallen over and died a couple times.

- Sidekiq logs to syslog, which is fine, but Ubuntu 18.04 defaults to rolling that log daily, and Sidekiq is really, really chatty by default. It fell over the first time because I had 14GB of just /var/log/syslog after a couple of days. I changed the settings to roll the logs every 3GB, moved the cron runner script to run hourly, and resized the machine to a 60GB instance to get back into the system.

- It ran out of memory! I haven’t actually fixed this yet - I added an 8GB swapfile. But Mastodon really needs 6GB of memory. It’s holding tight at 5 and change now for my tiny instance.

- So, my recommendation would be at least 2 vCPUs, at least 40GB disk, and 6GB RAM, and make sure you set up object storage (S3 or alike) if you’re going to post a lot of media. Then, make sure you make the logging changes I mentioned. If you don’t want to do any of that, then maybe do 100GB of disk or more.

- Update: I ended up with a 4 vCPU server, 8GB RAM and 80GB disk, and that seems to be pretty good for now. The CPUs are mostly idle at this point - I added them to help drain the message queue. That took a few hours; my Sidekiq queue was at about 100K jobs backlogged. After some further looking, it appears the DigitalOcean one-click installer installs a pretty old version of the server too (3.1.3 vs. 3.5.3 that’s out now), so now I get to figure out how to update it (on top of the other learning-how-to-admin-a-Ruby-app things). Nothing more fun than performing umpteen point upgrades for something…
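The syslog fix amounts to size-based log rotation checked on a tighter schedule. On Ubuntu that’s logrotate’s job (a size threshold in /etc/logrotate.d/rsyslog, plus running logrotate hourly instead of daily), but the policy itself is simple enough to sketch. This is a minimal Python illustration, not a replacement for logrotate: once the file passes 3GB, shift syslog to syslog.1, syslog.1 to syslog.2, and so on, dropping the oldest. The function names and the `keep` count are hypothetical, and a real setup also has to tell rsyslog to reopen its log file after rotating, which logrotate’s rsyslog config already takes care of.

```python
import os
from pathlib import Path

THREE_GB = 3 * 1024**3  # the threshold I settled on for /var/log/syslog


def should_rotate(log, max_bytes=THREE_GB):
    """True once the log exists and has grown past the size threshold."""
    return log.exists() and log.stat().st_size >= max_bytes


def rotate(log, keep=4):
    """Shift log -> log.1 -> log.2 ..., discarding anything past `keep` copies."""
    oldest = log.with_name(f"{log.name}.{keep}")
    if oldest.exists():
        oldest.unlink()
    for n in range(keep - 1, 0, -1):
        src = log.with_name(f"{log.name}.{n}")
        if src.exists():
            os.replace(src, log.with_name(f"{log.name}.{n + 1}"))
    os.replace(log, log.with_name(f"{log.name}.1"))
    log.touch()  # start a fresh, empty log


def check_once(log, max_bytes=THREE_GB, keep=4):
    """What an hourly cron job would do: rotate only if the log is too big."""
    if should_rotate(log, max_bytes):
        rotate(log, keep)
        return True
    return False
```

Checking hourly rather than daily is the important half: with a chatty logger, a daily check lets the file grow for up to a day past the threshold before anything happens.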

My instance specifically is as it ships, except I did turn on ElasticSearch for full-text searching, and I’ve added about 7 relays into it. (I think the relays are really the problem with regard to logging. It’s just a lot more jobs that get scheduled into Sidekiq.)

Speaking of relays, I added a handful of relays off of this list to my instance, and now I’m going to clear out a few of them. Relays help shuttle a bunch of toots to your instance, so your federated timeline is more “fleshed out” and your posts get to more places faster, but you should be somewhat judicious about this. Most of them give you a list of instances they know about if you just go to the relay’s root page, and that’s useful for seeing what kind of content will be relayed to you. In my case, I added too many; my federated feed has a lot of just sort of random stuff in it now. (I added a relay in Japan and half of it is Japanese now.) If you’re running a vanity instance like mine, maybe just having a couple relays would be a good idea. You don’t specifically need them.

It’s pretty interesting and fun, though

It doesn’t replace Twitter for me - all the local news stuff, sports-specific stuff, and complaining-about-service stuff is just about nonexistent on Mastodon right now. But, it’s pretty fun to be on and to keep up with. And, it’s a lot slower, so it’s easier to catch up on and then be done with. (And there’s a lot less yelling. I signed up for some of that yelling, but it’s nice to have it in a separate place.) I recommend it - it is a lot more work to use and get into than Twitter, but it’s pretty worth it. I liked it enough to sign up for the developers’ Patreon.

At some point I’ll swap the Twitter box on the right for a Mastodon box, and at some point I might cross-post things from Mastodon to here, or vice versa, or also to Instagram. (My ever growing cache of cat pictures could use a less Facebooky home.)

If you want to find me on the Mastodon, I’m @james@kachel.social.

Futzing around with XDM

A buddy of mine asked me a weird question the other day: how do I get a remote GUI on a Linux server? As it happens, I knew at least the starting point to this query. It involves using a bunch of stuff that’s built into X Window that’s been there pretty much forever.

Some background

Most people who are familiar with your average Linux distribution are familiar with at least one of the desktop environments that they come with: GNOME, KDE, Xfce, Cinnamon, etc. These provide the UI and a bunch of utilities and other miscellaneous things to allow you to use your machine. Different distributions use different desktop environments, and some distros offer some choice in the desktop environment you end up with. (For example, Manjaro Linux has three - you can download a version that uses KDE Plasma, one that uses GNOME, or one that uses Xfce.) But, the desktop environment is only half the equation. All the desktop environments run on top of a separate graphical layer - generally the X Window System, or just X. (Xorg, specifically, which is the current implementation of it.)

X itself is really a protocol - your computer runs an X server, which talks to your keyboard, mouse, and screen; and the programs you run are X clients and talk to the server to display output and capture input. Most of the time, this is all done on your local system, but there’s no requirement for that. X has supported, from its beginnings in the 80s, the ability for the server and clients to be on separate computers and talk over a network. This gives you a lot of flexibility. X in addition has a concept called the Display Manager, which allows you to request a session and log into a machine via a greeter program. This is actually how you log into your average Linux system - there’s one of the various DMs running and it displays your login prompt and gives you some options, and then actually logs you in and starts your session. Display managers also work over the network, so you can have one (or several) large machines that do all the compute and a bunch of smaller machines that the users actually use.
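To make the client/server split a bit more concrete: an X client finds its server through the DISPLAY environment variable, which has the form host:display.screen. An empty host means a local connection over a Unix socket (conventionally /tmp/.X11-unix/X<n>), and a server listening on TCP uses port 6000 plus the display number. Here’s a minimal Python sketch that pulls a DISPLAY value apart. It only handles that common form (not IPv6 literals or DECnet-style addresses), and the class and function names are just for illustration.

```python
import re
from typing import NamedTuple


class XDisplay(NamedTuple):
    host: str     # "" means "this machine, over a local Unix socket"
    display: int  # which X server on that host
    screen: int   # which screen of that server (usually 0)

    def tcp_port(self):
        # X servers that listen on TCP use port 6000 + display number.
        return 6000 + self.display

    def unix_socket(self):
        # Conventional socket path for a local X server.
        return f"/tmp/.X11-unix/X{self.display}"


_DISPLAY_RE = re.compile(r"(?P<host>[^:]*):(?P<display>\d+)(?:\.(?P<screen>\d+))?")


def parse_display(value):
    """Split a DISPLAY value of the form host:display[.screen]."""
    m = _DISPLAY_RE.fullmatch(value)
    if m is None:
        raise ValueError(f"not a host:display[.screen] value: {value!r}")
    return XDisplay(m["host"], int(m["display"]), int(m["screen"] or 0))
```

For example, when you SSH in with -X, sshd typically sets DISPLAY to something like localhost:10.0, which this reads as display 10 on TCP port 6010: the forwarded tunnel back to the X server on your own machine.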

Back in the day, there were actually hardware devices known as X terminals that contained your standard input/output devices and enough compute and network capability to handle incoming X connections. Sun Microsystems made a couple, and there were some NCD machines as well. Nobody really does this with X anymore, though, oddly enough, this general idea and setup persists in the Windows world.

So, in conclusion, X - the actual thing that makes your GUI work on a Linux box - can push all your pixels over the network to a separate machine. It’s been built into the system since it came out, essentially, and literally everything supports it. (To some extent - some things go around the X server and more-or-less write directly to the display hardware. That stuff’s not using X the protocol, though.)

So, the premise

The ask was to be able to bring a more-or-less normal Linux desktop over the network from the server to a local system. Basically, Windows’ Remote Desktop functionality. It turns out this is relatively not terrible to set up, though it is somewhat.. Unixy to use. As I mentioned, the Display Manager handles setting up logging in graphically and setting up the session, and that additionally can work over the network and supports multiple sessions, so that’s where you start. Then, you need to be able to get something to request the session over the network. Finally, you have to figure out how to do at least a bit of security for all this. (Remember how I mentioned that X did this in the 1980s? That wasn’t a joke - X originally came out in 1984 and this functionality was in place then - but the world was very very different at that point, and so it does a lot of things over unencrypted communication channels, which is just really super not good today.)

My thought was to do a couple things:

- Set up the DM on the server machine to accept “remote” connections. (They won’t really be remote connections but by default DMs expect to see just one set of physical input devices attached directly to the machine.)

- Set up a nesting X server on the server machine that’ll request a new session from the DM, and, through the magic of just basically being a proxy for X, display that over the network.

- Get an X server running on the local machine that can be used. (Surprise! WSL 2 has this built in.)

- Finally, use SSH to log into the remote system, run the nested X server, and behold! a graphical desktop environment from the remote system on your computer.

Setting up the DM is really pretty easy. Most modern Linux systems use either lightdm or gdm or sddm so you really just have to configure one of those. For lightdm and gdm, it’s just a matter of a few configuration lines added to a file and restarting the service. I found this page in the Arch Linux wiki that has some cut and pasteables. (This is nice because the documentation for lightdm specifically is “read the sample config file”, which is terrible. Do better, folks.) Note that you choose which DM you’re using in step 1 and then do the steps for that one - you don’t have to configure all three options provided, and you shouldn’t because you can only run one DM at a time. I chose to stick with the lightdm one for my initial testing.
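For lightdm, the change comes down to switching on its XDMCP server section in /etc/lightdm/lightdm.conf. As I understand it from the Arch wiki, that’s an [XDMCPServer] section with enabled=true, listening on XDMCP’s standard UDP port, 177. Here’s a minimal Python sketch of that edit using configparser. The function name is hypothetical, and because configparser throws away comments when it rewrites a file, hand-editing (or at least backing up the original) is probably the better move on a real machine. Either way, restart lightdm afterwards.

```python
import configparser
import io


def enable_lightdm_xdmcp(conf_text, port=177):
    """Return lightdm.conf text with the [XDMCPServer] section switched on."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key names exactly as lightdm spells them
    parser.read_string(conf_text)
    if not parser.has_section("XDMCPServer"):
        parser.add_section("XDMCPServer")
    parser["XDMCPServer"]["enabled"] = "true"
    parser["XDMCPServer"]["port"] = str(port)
    out = io.StringIO()
    # Write key=value with no spaces, matching lightdm's sample config style.
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()
```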

Setting up the nesting X server is also pretty easy. There are two: Xnest and Xephyr. You just find those (search for them in your package manager, probably using their names all in lowercase) and install one. No further configuration. What these do is provide an X server that outputs to another X server - any X clients you point at it basically just get thrown in a buffer that gets displayed elsewhere. Because they’re real X servers, they support display managers and can request a session from one. In my experience, Xephyr works better, especially over a slower connection.

You’ll need something for the nesting X server to talk to, and that is an X server on your local machine. On macOS, you use XQuartz for this. Windows is a bit different. There are some X servers for native Windows, but WSL 2 gained support for running graphical Linux apps in August 2022, and that support includes an X server (via XWayland), so you don’t have to do anything. (And, let’s face it, you’re about knee-deep in Unixy stuff at this point, so why not just go whole hog and use WSL if you’re using Windows?)

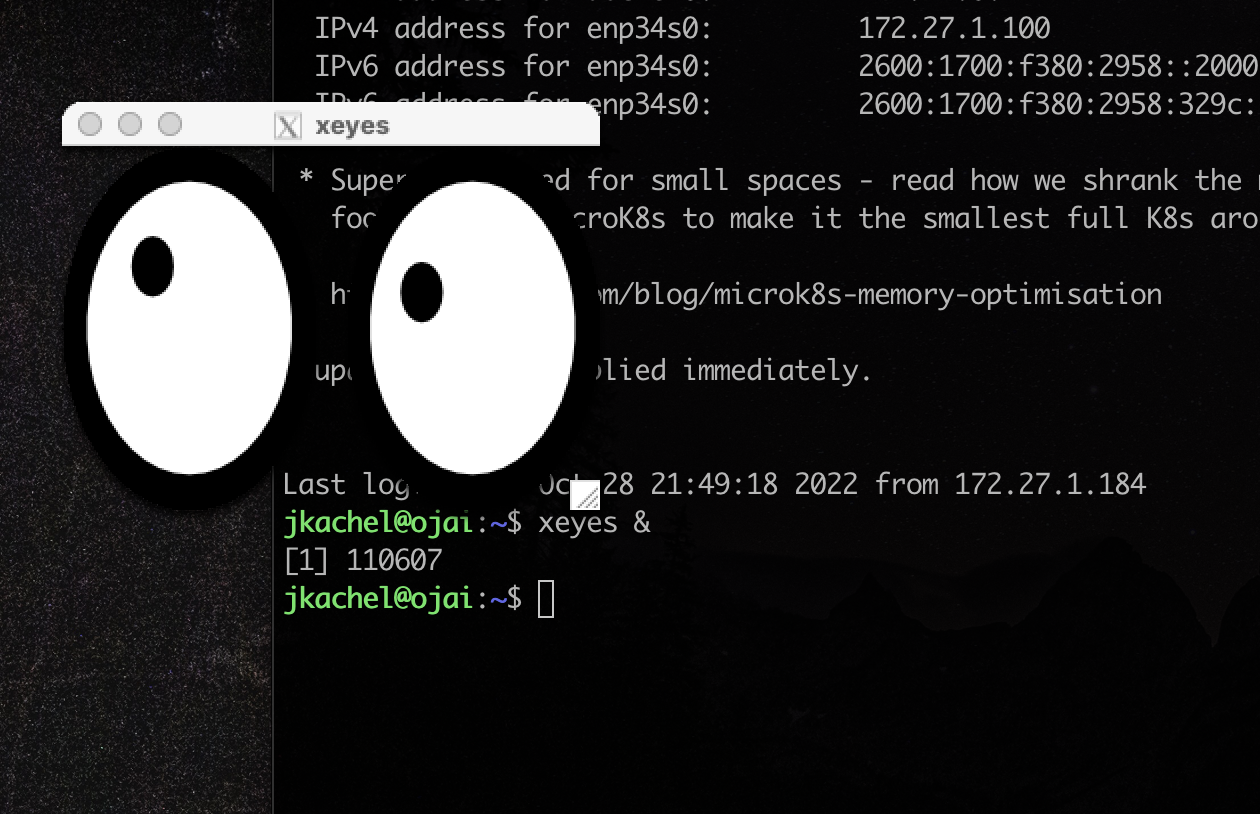

Finally, you need to be able to access the remote system. You just use SSH for this. You may need to enable X11Forwarding on the server side, but you should be able to just use the -X flag when you connect to your machine to automatically forward X11 stuff to your machine. You can test this by SSHing into your remote machine with the -X flag and running something simple (like xeyes, which will put a set of eyes on your desktop that will follow your mouse cursor.)

Fun fact: you can run any X program like this! So, if you don’t want to screw around with the whole desktop environment thing, you can literally just ssh -X into something and run whatever, and it should appear alongside your other windows.

Actually running things

To actually get a desktop, I ran:

Xephyr -query 127.0.0.1 -br -ac -noreset -screen 1280x720 :1

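This runs the Xephyr server, tells it to ask for a session from localhost, sets the screen size to 1280x720, and makes this display :1. (-br gives it a black background, -ac turns off access control, and -noreset keeps it from resetting when the last client disconnects.) It’s important not to reuse a display number that’s already in use; Xephyr won’t let you. Once I ran that, it popped a window on my desktop and gave me the normal login screen. Neat!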
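Since Xephyr refuses to start on a display number that’s already taken, it can help to check first. A running X server conventionally leaves a lock file at /tmp/.X<n>-lock and a socket at /tmp/.X11-unix/X<n>, so a minimal sketch (assuming that convention holds on your system; the helper names are hypothetical) can scan for those, pick the first free number, and build the same Xephyr command as above. It’s racy, since something else could grab the display between the check and the launch, but that’s fine for a one-person setup.

```python
import re
from pathlib import Path


def used_displays(tmp_dir="/tmp"):
    """Display numbers that look taken, judging by X lock files and sockets."""
    tmp = Path(tmp_dir)
    used = set()
    # A running X server conventionally holds /tmp/.X<n>-lock ...
    for lock in tmp.glob(".X*-lock"):
        m = re.fullmatch(r"\.X(\d+)-lock", lock.name)
        if m:
            used.add(int(m.group(1)))
    # ... and listens on the Unix socket /tmp/.X11-unix/X<n>.
    sock_dir = tmp / ".X11-unix"
    if sock_dir.is_dir():
        for sock in sock_dir.iterdir():
            m = re.fullmatch(r"X(\d+)", sock.name)
            if m:
                used.add(int(m.group(1)))
    return used


def next_free_display(tmp_dir="/tmp", start=1):
    """Lowest display number >= start that nothing appears to be using."""
    used = used_displays(tmp_dir)
    n = start
    while n in used:
        n += 1
    return n


def xephyr_command(display, size="1280x720", host="127.0.0.1"):
    """The same Xephyr invocation as above, with the display number filled in."""
    return ["Xephyr", "-query", host, "-br", "-ac", "-noreset",
            "-screen", size, f":{display}"]
```

Launching is then just `subprocess.run(xephyr_command(next_free_display()))` from the SSH session.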

Other options

It turns out too that lightdm (and probably others, not sure) will just go ahead and set up VNC for you. There are some instructions here - if this is set up, you can use a standard VNC client to connect. (macOS has one built in since that’s how its own screen sharing stuff works, and they aren’t hard to come by everywhere else.) The second time I set this up tonight - yes, I did it twice, it really didn’t take long - I ran into some issues with the XDMCP method, but for some reason turning on the VNC server worked just fine. Go figure.

So, here’s a neat thing you can do with some crusty old Unix stuff that is amazingly still very well supported today.

A comparison of two laptops

Full disclosure: this is more or less going to be however many words of me hating on my MacBook Pro. Just so you know.

I have a 2019 13” MacBook Pro system, the base two USB-C port model with slightly upgraded storage (256GB from the base 128GB). I bought this system to do software development and as a general purpose daily driver to replace the hodgepodge of machines I was using so I could be a more effective software developer on a project I was working on. The choice to get this particular model machine came down to cost, capabilities over the similar tier MacBook Air model, and desire to have a macOS-based system (as my dev toolchain works best on a Unix-y system). This was a $1,500 system when I bought it, after my educator discount and fortuitous timing to get it during a tax-free weekend.

This thing was a mistake. (This is the bit where I just complain about this dumb computer.) On paper, it seems pretty nice for the time, but there are problems. The 8th generation Core i5 CPU in there was getting old, even in 2019. It doesn’t help that it’s a laptop efficiency SKU, so its base clock is 1.4GHz (and no HyperThreading). The 256GB storage is far more limiting than it seemed it would be. The keyboard, well.. this one’s got the 3rd generation butterfly switches, so it doesn’t fail if you look at it wrong or happen to not live in a clean room, but the switch feel is terrible. I tried to remedy some of this. Really, this thing mostly lived as a desktop, so I’ve amassed a bunch of USB-C dongle docks and one quite fancy CalDigit one, and I had additional storage, screens, and a real keyboard hooked up. But then you have to deal with macOS getting confused by the things attached to it: sometimes forgetting that there are screens, getting stuck in beachball mode, etc. All the while, WSL kept getting better, and PC parts kept getting cheaper. So ultimately I ended up building (now a handful of times - fiddling with things is fun!) a desktop system that’s now my daily driver, and the Mac rarely gets used anymore. I didn’t even miss it when it had to spend a week or so out at AppleCare for service. (The keyboard broke. But not in the way everyone’s did: my backlight gave up. So, it’s got a new keyboard now. And a new screen, because the shitty webcam also failed. First time I’ve sent a machine in for service in maybe a decade.)

Now, the easy fix for this is one you can do on the front end: don’t buy the base model. I thought 8GB RAM, especially coupled with Apple’s “fast” SSDs, would be OK, but it doesn’t work out with the stuff I tend to need to have running (which includes Docker, IDEs, all that). I thought too that I could make do with 256GB, but that just meant I was running the SSD close to full all the time, which is bad. And sorry, but a 1.4GHz Core i5 sucks. Just does. Almost as much as the keyboard. So, why didn’t I get the 4-port model instead? In this case, because the cost went up dramatically. I got this thing during a tax-free weekend, so stepping up would mean going to the $1,800 system or so, and it’d also mean paying roughly another 10% in sales tax. So really, instead of a $300 difference, it’s closer to $600. (In Tennessee, tax-free weekends cover computer purchases up to $1,500 as long as they’re entirely under that amount. Go a penny over and the entire purchase price becomes taxable again.) Plus, the obvious: I had the money for that machine, and not more than that. So, I dealt with it, and as things went on, I built up my daily driver desktop PC into the fairly ridiculous system it is.

Laptops are nice to have, and it’s also nice to be able to do stuff away from my desk with its questionable ergonomics, so I’ve been looking at and thinking about getting a new system. I’m also now mostly a Windows user again (Docker and WSL 2 especially make things a lot nicer for the things I need to do), so I’ve been considering Windows machines too. I’d mostly been eyeing a Dell XPS 13” or HP Spectre x360 13”, as I like the form factor of the smaller machine. Either of those would run about $1,500. Of the two, the Spectre was the winner, since Dell likes to run warranty scams and the Spectre just had better specs for less money. But, I saw that Best Buy actually had a Lenovo system for pretty cheap that had pretty nice specs. Poor impulse control said I could get it, so I did.

The machine I got is a Lenovo Yoga 6, which I suppose is nominally part of their IdeaPad line, so not a fancy ThinkPad system. It’s a 13.3” machine again, occupying the same 2D footprint as the MacBook Pro but somewhat thicker (maybe about 33%? Both of these machines are super thin), largely due to its convertible nature (it has a touchscreen and folds backwards). The screen isn’t quite as nice as the Mac’s. It’s just a 1080p panel versus the sort of 2.5k that the Mac has (which you can’t actually run at native resolution), but it’s close enough. The trackpad isn’t as nice, but it’s worth noting that Windows Precision trackpads are leaps and bounds better than what used to come on Windows laptops. (Again, it’s close enough.) Port selection is way, way better: 2 USB-Cs and 2 USB-As, though only one of the USB-Cs supports PD, and there’s no headphone jack on the Lenovo. The keyboard is so much better, and mine’s even slightly broken (the LEft Shift Key Sticks Occasionally), but the layout is comparable to the Mac and it’s even backlit. No touch bar, which I prefer, though I wasn’t a touch bar hater. (Escape is roughly in the same place either way, and tactile feedback is nice, but by the same token, the touch bar does some neat things that I sort of miss a bit.) Has a fingerprint reader! The webcam sucks (it’s a 720p thing, but it works, I guess), but as a nice touch it has a physical shutter you can activate. Mine came in a nice darkish blue with a denim cover on the screen, which I like a lot. Needless to say, this is a plastic machine, but it’s nice plastic.

The guts are where the thing really stands out. Even adjusting for time, it’s way better. The Lenovo came with an AMD Ryzen 7 5700U CPU, 16GB of RAM, and a 512GB SSD that’s actually upgradable. This means it’s got a CPU that’s pretty comparable to my desktop and better graphics than most anything Intel has, especially the UHD stuff that’s in the 8th gen. (Probably worth noting that, despite the name, the laptop Ryzen 7 5700U is internally a Zen 2 CPU, so it is really the baby laptop-style sibling of my main machine’s Ryzen 7 3700X. Same architecture, same 16 threads, but clocks and TDP are different, and the laptop CPU’s got graphics where the desktop one does not.) 16GB RAM is good too, especially since that isn’t upgradable. The SSD is limited to PCI-Express 3.0, but I can swap in a bigger one easily. The SSD itself is even a Western Digital Black model, which I believe benchmarks faster than the one in the Mac. (I can feel the difference between it and the ridiculous Sabrent Rocket PCIe 4.0 one in the desktop, but that’s a ridiculous drive.)

Price comparisons are a fun thing to do, so let’s do that. I looked on the Internet’s favorite auction and corporate intimidation site, eBay, and found that my MacBook Pro can be had for around the $600-$700 mark at this point. Which is handy, because the Lenovo was $700. Yep. Granted, I saw this system on Best Buy via the Ars Technica deals thing, so it was on sale. Normally it’s $950.

Basically, this all made me somewhat more annoyed than I was with the Mac before. If I’d stripped out the macOS requirement when I was looking initially, I’d have ended up with a much better laptop, and now, barely 2 years later, I spent about half the price of the Mac and easily got way more machine. Oh well. At least the USB-C dongles and such are still useful. The Lenovo lacks Thunderbolt (it’s an AMD machine), but it’s still got a couple of whatever the fast USB-C ports are, so I can still use ‘em. (And part of my annoyance was/is those things: I have like 3 of the dongle dock things, and the CalDigit one wasn’t cheap.)

So, moral of the story: don’t buy the base model, and, yeah, look at the damned Windows machines. I’d have been happier with either a contemporary Dell XPS or HP Spectre/Envy or something or by saving a bit more and getting one of the 4-port MacBook Pros. (Or even the Air, really - 512GB storage would be better. Or being able to upgrade the storage. Damned Apple SSDs aren’t even that fast. They’re not magic.) I am, though, real impressed with this cheap Lenovo.

Other thoughts: Battery life is still pretty great. I get a good 8 hours at least, depending on what I’m doing. Lenovo gives you a one-year warranty, and extending to up to 4 years is pretty cheap: something like $200ish for 4 total years with on-site support, less if you’re good with shipping it out. Mine didn’t have too much bloat crap on it, just a handful of annoying Lenovo apps and McAfee (or the ghost thereof). All of it went away when I reformatted and installed Windows 10 Pro, other than a couple of Lenovo things to make the support site work. I can’t unlock it with my watch, but then most of the time I unlocked the Mac with the Touch ID sensor because the watch unlock is really slow. The convertible bit is pretty nice, though I won’t use it much. Still, it’s nice to have, and because of poor impulse control I did get a Wacom Bamboo pen for it, which works pretty well (at least as well as my ancient Surface Pro 4 pen). I have in fact used it a few times in the short time I’ve had it so far. And man, the keyboard. It’s like it was designed by people who actually use their computers for things and who might want to type on them occasionally. Nice travel, nice tactile bump, and this isn’t even the nicer ThinkPad keyboard. Being able to actually do things from the couch is nice.

Refurbing a PowerBook G4

So, I have this PowerBook G4 that’s been sitting on a shelf for a while. It’s not a terribly interesting model: just a regular 15” one, second-to-last generation, that’s basically stock except for a slightly upgraded hard drive (faster, but not any more spacious). I believe I originally got the machine maybe 12-13 years ago as an “it’s gonna get thrown out otherwise” deal from one of the various jobs I’ve had, just as an extra machine to do things with. (Intel Macs were already out by then, so even at that point it was skirting the edge of usefulness as a PowerPC Mac.) At some point, it got given to a friend to use for audio stuff, and then it ended up back in my hands a year or two ago, where it’s sat since then. I think I tried to power it up once and it really wasn’t having it, so it got turned back off and shelved.

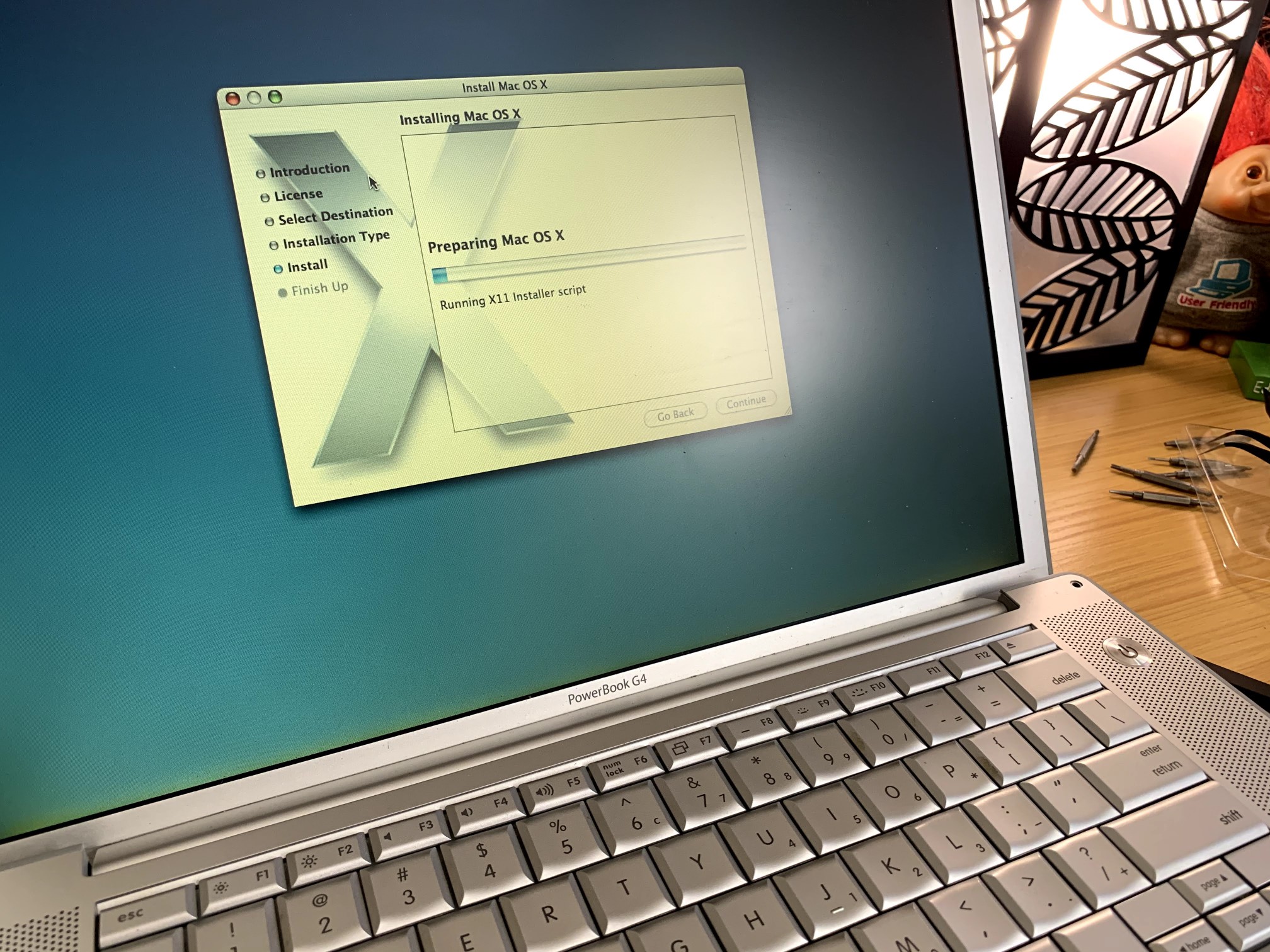

I ran across the thing the other day while doing some reorganizing and decided, why not see if this thing works? So, I pulled out a power adapter (fortunately I actually have a couple!) and gave it a shot. Lo and behold, it actually did power back up and at least tried to boot! This was pretty surprising. It didn’t boot successfully, but it at least got to a point where I could start it in verbose mode, so that was nice. A burned Tiger DVD later and.. yeah, the hard drive had given up the ghost. I happened to be watching one of the myriad YouTube channels about retro computing, and ended up watching a video about putting an mSATA SSD into a Titanium PowerBook G4. Fortunately, it also listed the specific parts necessary for this, so I headed to Amazon and made a quick purchase. (You do have to be a bit careful with the SSDs. Chips to convert SATA to old-style parallel ATA are pretty easy to come by, as are enclosures that give you that plus an m.2 slot for an actual drive. But the drive itself needs to be mSATA specifically; an NVMe one won’t work behind a SATA-to-PATA bridge. So, it was nice to have an enclosure and drive pair that was known to work, and known to work in a PowerBook G4 specifically, even if it was a much older one.) Once all that arrived, I pulled the machine apart (which is simply a case of “take out all the screws, use a bit of prying, hope you remember where the screws went”) and swapped out the now-dead hard drive with the assembled mSATA enclosure. Reassembled and, hey! Now I have an actually working PowerBook G4 running Tiger.

As a side note: it’s pretty impressive how much faster even a really cheap, probably somewhat iffy SSD is over a spinning rust disk.

With Tiger installed, I put a couple of older games on there and a few useful things, like TenFourFox (a modern version of Firefox compiled to work on old versions of Mac OS X and with old PowerPC processors). The next step was getting Linux on it, because of course. I tried Adelie Linux, which still produces a PowerPC version (and also because the channel that did the TiBook upgrade has a video on putting that on a G3 iMac), but it seemed like too much work. (There’s no installer, so you’re basically bootstrapping it via chroot by hand. The “too much work” thing.. well, that’s a pretty dubious line.) So, it got Debian Linux 8, which does have a proper installer. It works pretty well! Obviously there are some caveats, given the age of the CPU in it and the limitations of the hardware in general, but Firefox seemed to work OK. Really, the biggest pain was resizing the existing Tiger install. That OS is old enough that it doesn’t understand resizing partitions, so I had to clone it to a flash drive (which it can’t boot from, because Open Firmware), repartition the drive, then clone it back. And oh, did I mention that this machine only has USB 2.0? That took a while. All in all, though, it did work, and now it will dual-boot into Debian and Tiger.

Now, because I am a terrible nerd, I also recalled that OS X used to ship with X11. Not installed by default, but it was on the DVD, so that got installed too. Now I can do stupid Unix tricks with the much, much, much faster Core i5-4570 Ubuntu system sitting headless on a shelf behind it. Unfortunately, the version of X that comes with Tiger is rather old, so it’s not particularly happy working with modern things (and modern things aren’t particularly forgiving of this, and therefore crash). There is, of course, more than one way to do this.. so a download of Xcode 2.5 was initiated, and, lo and behold, MacPorts actually still supports OSes all the way back to Tiger. With Xcode installed, I went ahead and installed MacPorts as well. Some hours later, I have now a working MacPorts setup on here.

Another aside: I also installed WebObjects. Ask your elders. I have a boxed copy of this on my shelf, so I have printed manuals that might not be too horribly out of date with the copy that’s installed on here. Maybe I’ll do something with it!

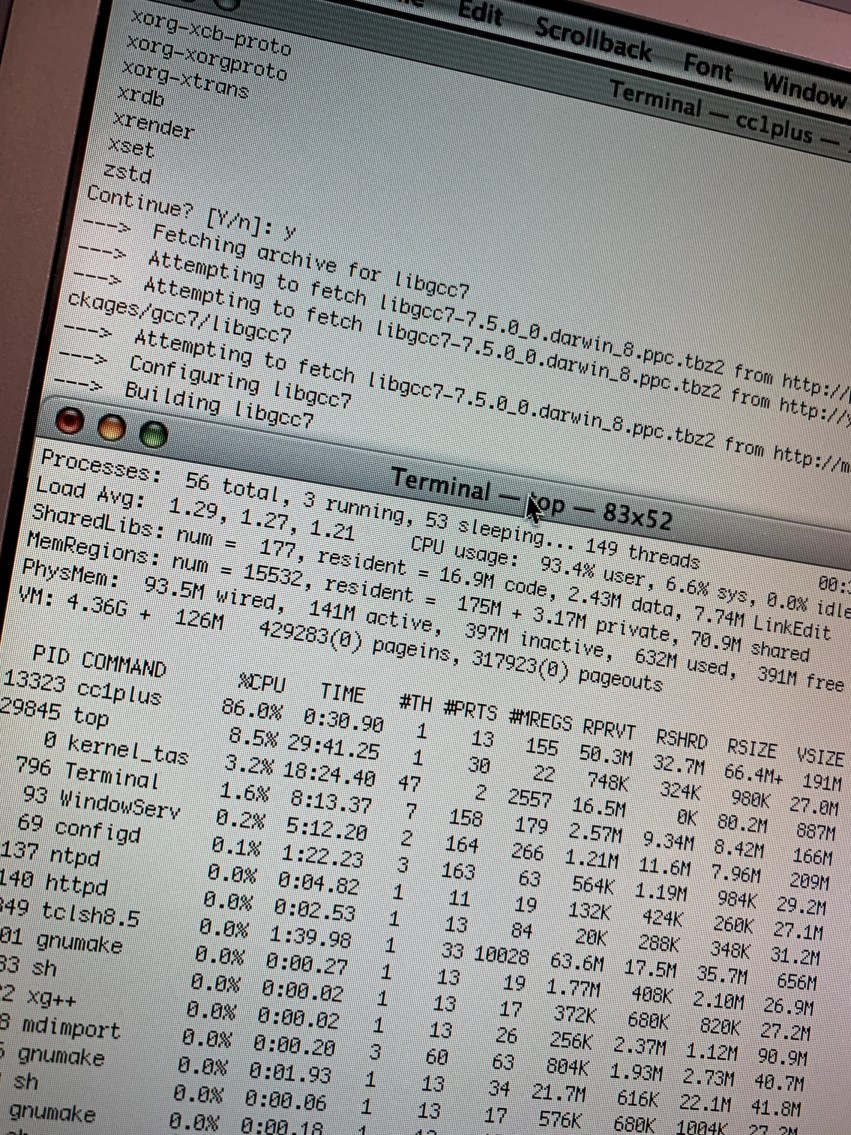

The MacPorts setup on older platforms like this basically works by replacing all the development tools with its own toolchain. So, while you need Xcode installed so it can at least bootstrap itself, it’ll install its own copy of gcc and all that so it can bring up whatever package you’re trying to install. (This is basically a BSD ports-style distribution, after all - it’s less a package manager like apt and more a build script manager. Everything is compiled, except where it’s not a compiled language or whatever.)

So, “sudo port install xorg-server” was run. And that’s where this story ends for now, because that was.. quite literally 12 hours ago. It’s not done yet. It’s still compiling libgcc. It stopped in the middle, even, because it required manual intervention (literally, running a command, but still) to disable a library so it could continue building things successfully. Perhaps it will be done in the morning, but I doubt it; there were a lot of packages that needed to be done up and just the bzip2 install alone took an hour or so.

Needless to say, the takeaway so far is: 1.5GHz G4s were really fast in 2005. They aren’t so much now. Bootstrapping a modern compiler toolchain on such a machine - even one with an SSD - takes a while, because there’s only one core, because that wasn’t a thing yet. (Not in consumer applications, anyway.) On the plus side, I know this machine definitely works fine, because the load average on it has sat at 1.3 since this process started.

I do have some more stuff to do with it - it’s only got 1GB RAM, so I’ve got another DIMM on the way - but really this will be around to play old games on and maybe fiddle with old developer tools and stuff. And stupid Unix tricks.

Final aside: because old games, I did have to get Mac OS 9 installed on it. Problem: this machine is too new to boot OS 9. (It being a PowerPC means it runs in Classic on this system.) So, I had to figure out how to install OS 9 via Classic without the restore CDs, which would have included a disk image Classic environment to work with. I did figure that out! So it actually, technically, runs 3 OSes - OS 9, OS X 10.4.11, and Debian Linux 8. (And maybe Leopard if I can find my DVD of it. I don’t know that I want to buy a spindle of dual-layer DVD-Rs.)

Quick actual nerd thing!

The other day, I shipped my MacBook Pro off to be fixed, as the webcam and the backlight on the keyboard stopped working. (They broke some time ago, but it’s coming up on the end of the standard warranty, so I figured I’d get that done before it cost actual money to fix.) This post isn’t really about that computer, though; it’s much more about something that I managed to cobble together to take care of a thing I was doing on it. This post is much more about Platypus.

So, while I do still use my Macs a good bit, they don’t get used a whole lot for real work. (The ridiculous Ryzen machine takes care of that for the most part.) The Mac gets used for Web terminal stuff, email, and messaging via Messages, so basically text messaging. (Outlook on Mac is better than Outlook on Windows, because of the unified inbox, and because it actually checks Gmail accounts on a regular basis. Why is this so freaking difficult?) With the new machine in for service, I set up my 2010 MacBook to handle the handful of Mac-type things I can’t reasonably do on the Windows PC. It works well enough for its age, but it’s not the quickest thing to do Web browsing on. I was also getting somewhat annoyed with having to switch machines to send Messages (or otherwise having to do all that on my phone).

To that end, I set up the MacBook as sort of a third screen next to the Ryzen machine’s monitors and put Synergy on it. Synergy still works really well, and I can control the MacBook from the Ryzen box. Then I got to thinking about getting it set up to open links and stuff on the Ryzen system, rather than trying to do it on the MacBook itself. I did some poking around and found some browser-picker apps that give you a lot more options on the Mac side, such as Choosy and Finicky and a few other things. I also found a page talking about using the Chrome remote debugger, which has now been deprecated. Then I had an epiphany: can’t I open a URL on the command line? And, well, yes. On either Mac or Windows, running a command will allow you to open arbitrary things through the GUI (on Mac you use “open”, on Windows you use “start”). So, “start http://www.google.com” opens a new tab in Chrome and loads that URL.
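As a hedged illustration, here is roughly what that per-OS command looks like when a script builds it. It’s in Python because that makes it easy to show both platforms side by side. The Linux xdg-open branch is an addition that isn’t mentioned above. One wrinkle worth knowing: start is a cmd.exe builtin rather than a program, so it has to go through cmd /c. cmd will then treat an unescaped & in a query string as a command separator. The sketch sidesteps that on Windows by using os.startfile, which opens the URL through the shell’s handler instead.

```python
from __future__ import annotations

import os
import subprocess
import sys


def open_command(url: str, platform: str = sys.platform) -> list[str]:
    """Return the argv that hands url to the OS default handler."""
    if platform == "darwin":
        return ["open", url]
    if platform.startswith("win"):
        # start is a cmd.exe builtin; the empty "" fills its window-title
        # slot. Beware: cmd still parses &, | and ^ inside the URL.
        return ["cmd", "/c", "start", "", url]
    return ["xdg-open", url]  # common on Linux desktops (an assumption)


def open_url(url: str) -> None:
    if sys.platform.startswith("win"):
        os.startfile(url)  # Windows-only; avoids cmd.exe parsing entirely
    else:
        # Popen, not run(): don't wait on whatever program gets launched.
        subprocess.Popen(open_command(url))
```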

So now I just needed a way to get URLs across the wire from the Mac to Windows. The Windows side was actually pretty easy: I just wrote a (very) simple PHP script that looks for a “url” GET argument and then pipes that into an exec call. This is wildly insecure but it does at least check for a valid remote IP and it’d be pretty easy to put in a simple URL regex check in there or to add some simple request signing (and of course everything’s behind a firewall anyway), but, after firing it up with the built-in PHP server, I could hit a URL with another URL in it and it’d open on the Windows machine.
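Here’s a rough equivalent of that endpoint. It’s sketched with Python’s standard-library HTTP server rather than the PHP built-in server the post actually used. The allowed client address, the port, and the function names are all hypothetical. It adds the kind of URL check suggested above: only http/https URLs with a host get through. It then hands the URL to webbrowser.open, which on Windows normally goes through os.startfile rather than spawning a command prompt, so the reply doesn’t wait on a console window.

```python
from __future__ import annotations

import webbrowser
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlsplit

ALLOWED_CLIENTS = {"192.168.1.50"}  # hypothetical: the Mac's LAN address
LISTEN = ("0.0.0.0", 8123)          # hypothetical bind address and port


def is_safe_url(url: str) -> bool:
    """Accept only http(s) URLs with a host; rejects file:, javascript:, etc."""
    parts = urlsplit(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def extract_url(path: str) -> str | None:
    """Return the single ?url= value from a request path, or None."""
    values = parse_qs(urlsplit(path).query).get("url", [])
    return values[0] if len(values) == 1 else None


class OpenUrlHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.client_address[0] not in ALLOWED_CLIENTS:
            self.send_error(403)  # the "valid remote IP" check
            return
        url = extract_url(self.path)
        if url is None or not is_safe_url(url):
            self.send_error(400)
            return
        webbrowser.open(url)      # os.startfile under the hood on Windows
        self.send_response(204)   # nothing to return; the client just needs a reply
        self.end_headers()


def serve() -> None:
    HTTPServer(LISTEN, OpenUrlHandler).serve_forever()
```

Call serve() on the PC. Like the PHP version, this is plain HTTP and belongs behind the firewall.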

The Mac side was a bit more involved. I wrote a simple shell script to pump the first argument passed to it into curl, so curl hits my fancy endpoint on the Windows side of things, and I could open URLs remotely via the command line that way. The next step was figuring out how to get macOS to treat it as a “Web browser” and therefore “open” links using it. That’s where Platypus comes in.
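Since Platypus will wrap a script in any language, the Mac side could just as well be Python. Here’s a minimal sketch standing in for the curl script. The endpoint address is hypothetical, and the sketch assumes, as the original shell script did, that the URL arrives as the first argument. The important detail is percent-encoding the target URL, so that its own ? and & don’t get mixed into the endpoint’s query string. With curl, -G --data-urlencode "url=$1" does the same job.

```python
import sys
import urllib.parse
import urllib.request

ENDPOINT = "http://192.168.1.10:8123/"  # hypothetical address of the PC


def build_request_url(endpoint: str, target: str) -> str:
    # urlencode percent-encodes ':', '/', '?', '&', '#', so the target's own
    # query string travels as a single parameter value.
    return endpoint + "?" + urllib.parse.urlencode({"url": target})


def send(target: str, endpoint: str = ENDPOINT, timeout: float = 5.0) -> int:
    with urllib.request.urlopen(build_request_url(endpoint, target),
                                timeout=timeout) as resp:
        return resp.status


if __name__ == "__main__" and len(sys.argv) > 1:
    send(sys.argv[1])  # the clicked URL, passed in as the first argument
```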

Platypus is a pretty neat utility that I had no idea existed until today. At its core, it allows you to wrap a script (including a shell script) in a standard Mac app bundle, so that to the system it looks and works mostly like a normal app. It’s also got a bunch of neat features to handle script output and do things like load bits into a WebView (not like an app-specific browser, though), or display progress bars, or etc. etc. etc. The only drawback to it nowadays is that modern macOS really, really wants your apps to be signed, and it doesn’t do that, so if you’re on a modern system you’ll have to jump through some hoops to get that to work. But, it’s a pretty versatile utility that’s available for free.

I packaged the shell script up using Platypus. That worked about as well as it could have - I didn’t make any attempt, really, to get it to do anything but launch and run the script, and it did that (and, somewhat hilariously, it kept blocking because on the Windows side it was spawning a command prompt, which I had to close before PHP would consider itself done and then also return data back to curl on the Mac). The next step was to figure out how to get the thing registered as a Web browser system-wide and hope that macOS passed the target URL into it in a sane way.

Registering an app as a Web browser requires a couple of things. The app’s Info.plist needs a couple of key/value pairs to tell the system that it can handle http and https links, and the app needs to be in the system Applications folder. Platypus doesn’t have any controls in it to edit the default app Info.plist, so a bit of poking about was needed to edit it. (The gist of it: on the command line, I used plutil -convert xml1 to convert the plist to XML format, made some changes, and then used plutil -convert binary1 to convert it back to binary format.) I found the values that had to go into the plist itself in this Stack Overflow post. Once I had those in and moved the app bundle to /Applications, it showed up in the Default Web Browser dropdown in System Preferences. Selecting it, then opening a URL.. actually worked. (Not the first time, as.. well, that blocking issue? I’d managed to trigger that again, and had a couple of requests queued with no URL in there. So, once I cleared out the command prompt windows, I got a barrage of things I’d clicked on.) So, now I can click on URLs on the Mac and they’ll open in Chrome on my Ryzen machine.
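If you’d rather not hand-edit XML, the same change can be scripted. This Python sketch uses the standard plistlib module, which reads both XML and binary plists and can write binary back out, so it replaces the plutil round trip. It only adds a CFBundleURLTypes entry claiming http and https. The Stack Overflow answer the post relied on may list more keys (document types for HTML files, for example), so treat the key set here as an assumption. The bundle path in the usage line is hypothetical.

```python
import plistlib
from pathlib import Path


def add_browser_schemes(plist_path: Path, schemes=("http", "https"),
                        name: str = "Web site URL") -> None:
    """Add a CFBundleURLTypes entry for any of `schemes` not yet claimed."""
    with plist_path.open("rb") as f:
        info = plistlib.load(f)  # auto-detects XML vs. binary format
    url_types = info.setdefault("CFBundleURLTypes", [])
    claimed = {s for t in url_types for s in t.get("CFBundleURLSchemes", [])}
    missing = [s for s in schemes if s not in claimed]
    if missing:
        url_types.append({"CFBundleURLName": name,
                          "CFBundleURLSchemes": missing})
    with plist_path.open("wb") as f:
        plistlib.dump(info, f, fmt=plistlib.FMT_BINARY)  # back to binary
```

Usage would be something like add_browser_schemes(Path("/Applications/Opener.app/Contents/Info.plist")), where the app name is hypothetical. Running it twice is harmless, because already-claimed schemes are skipped.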

Now, the next steps are to make this somewhat more secure. Platypus can run pretty much any script (it just, really, runs it), so it can be in any language; a shell script just happened to be the most straightforward for now. So, I’d like to switch it up a bit so that I can maybe accept an SSH key or something and do this that way. Then it’s not just piping things in cleartext over the network, and it has at least some form of authentication. It’d also be great if the thing could figure out when nothing’s listening on that particular IP and port, so that it can fall back to a local web browser. If I grab the machine and go elsewhere, I’ll have to remember to switch it back over to Chrome or I won’t be able to click links outside the browser, and that’ll be annoying. Then, maybe I can release it on GitHub or something.
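Both of those next steps are small. Here’s a Python sketch; the shared secret, address, and parameter names are hypothetical. reachable() does a quick TCP connect to see whether anything is listening before sending. sign() and verify() add an HMAC-SHA256 signature over a timestamp and the URL, which the endpoint could check before opening anything. Two caveats apply. First, signing gives authentication, not secrecy: the URL still crosses the network in cleartext, which the SSH idea would address. Second, the local fallback can’t just call open on the URL, because the default “browser” is now this app. Instead, it names a real browser by bundle ID with open -b (Chrome’s com.google.Chrome here, as an example).

```python
from __future__ import annotations

import hashlib
import hmac
import socket
import subprocess
import time
import urllib.parse
import urllib.request

SECRET = b"change-me"              # hypothetical shared secret
HOST, PORT = "192.168.1.10", 8123  # hypothetical PC address


def reachable(host: str, port: int, timeout: float = 0.5) -> bool:
    """True if something accepts a TCP connection on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def sign(target: str, ts: int, secret: bytes = SECRET) -> str:
    msg = f"{ts}\n{target}".encode()
    return hmac.new(secret, msg, hashlib.sha256).hexdigest()


def verify(target: str, ts: int, sig: str, secret: bytes = SECRET,
           now: float | None = None, max_age: int = 30) -> bool:
    """Server-side check: fresh timestamp and matching signature."""
    now = time.time() if now is None else now
    if abs(now - ts) > max_age:
        return False  # stale, or a replay of an old request
    return hmac.compare_digest(sign(target, ts, secret), sig)


def dispatch(target: str) -> None:
    if reachable(HOST, PORT):
        ts = int(time.time())
        query = urllib.parse.urlencode(
            {"url": target, "ts": ts, "sig": sign(target, ts)})
        urllib.request.urlopen(f"http://{HOST}:{PORT}/?{query}", timeout=5).close()
    else:
        # Away from the desk: name a real browser by bundle ID, since plain
        # `open URL` would just route back to this app.
        subprocess.run(["open", "-b", "com.google.Chrome", target], check=False)
```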

Is this the easiest way to do this? Oh god no. The easiest way is probably just to keep using my phone for this stuff. And, as mentioned, I have Synergy set up between these systems. It syncs the clipboard, so I can right-click and copy links and paste them into Chrome (in either direction) that way too. But, this was a fun 30-minute distraction that honestly makes things a lot nicer. (And, as a note, I’ve easily spent two to three times as much time writing this post about all this as I did actually doing the thing, and it was supposed to be short, even! I don’t even want to compare the length of this post to the code written, as it’s quite literally less than 10 new lines of code so far.)

So, just a.. well, not-so-quick post about this neat thing. Stay tuned for the next post, in which I will invariably do something stupid with an old computer!

(Updated shortly after posting: Platypus actually does have an interface in it to register URI handlers for your generated app. I missed that somehow, so you can probably use that instead of mucking about with the Info.plist directly. Whoops!)

Cheap computer = go

So, at the beginning of the lockdown situation I had a go at refurbishing an old corporate desktop-class machine for use by a friend. This thing was an Optiplex 790 or some such tomfoolery, which was a first-generation Core i7 machine in the small form factor case (closer to a Mac mini in size, low-profile PCIe slots, etc.). It actually impressed me when it was done: it was a perfectly capable lightweight computing machine. My refurbishing amounted to fitting 16GB RAM to the thing (it had none at the time) and putting Windows 10 on it (which activated with the 7 license it had - corporate desktop!). Even with the spinning disk inside as a boot drive, it’s perfectly fine for some rather older games, and it handles basic tasks like web browsing and Office perfectly well. I did hold off on replacing the hard drive with an SSD, though. Some quick looking showed that doing that wouldn’t have been worth the price (even with how cheap SSDs are now).

The looking did give me ideas, though, as to what you can get for not much money. The dumb-no-longer-dumb-but-real-PC really started up as an experiment to see how cheaply you could build a decent PC, and that got pretty cheap but some more eBay searching and benchmarking indicated that you could do, perhaps, a bit better. So, I started looking at some of these business PCs, specifically the small form factor ones, and basically came up with this: for between $100 and $150, you can get a 3rd or 4th-gen Core i3/i5 system with some memory (generally about 8GB) and a spinning disk drive (usually around 500GB, though sometimes bigger or sometimes SSD), and it will likely have Windows 10 on it. That’s a complete computer (unless you want to get a bit tetchy about it not having a keyboard, mouse or monitor). To put that into perspective, the new build involved a Pentium Gold CPU for about $70, and about another $50 on the motherboard, and that doesn’t include even RAM or storage.

Prices spike after the 4th-gen CPUs at this point in time. Systems with i7s in them come at a premium as well, and I’m not entirely sure that premium is worth the additional cost or the jump down to a 2nd-gen CPU. (I’d rather have an i5-4560 than an i7-2660, personally.) You’re also not going to get a real GPU most of the time with these (onboard or bust), and if you get an SSD, it’s going to be a 120GB one. (I did look, and while I was more after a SFF system, a regular midtower doesn’t incur a price premium, other than maybe additional shipping cost.) But, that’s still a complete system, with a licensed and activated copy of Windows 10, for about $150. And that’s a fair amount of computer, too. It’s not going to be the thing you want to depend on to do your 4k renders for YouTube, but for most anything else it’ll be perfectly acceptable. Even some software development stuff shouldn’t be too hard to do on a machine like this.

I did end up putting a bit of money where my mouth is on this one, and I ended up with an HP ProDesk 600 G1 SFF machine. Spec-wise, this thing came with 8GB DDR3, a 500GB spinning disk (with two! empty bays and matching SATA ports for each, one of which is a 2.5” one), a DVD-DL RW drive, a Core i5-4570 CPU (3.2GHz, burst to 3.6), and Windows 10 Pro. Also handy: 3 PCIe slots (one x16 and two x1), DisplayPort, and USB 3 ports. And a serial port. Because business. It’s also a vPro-capable system, so it even has some rudimentary LOM stuff on board, ready to be an attack vector because Intel has problems with that. I’m going to drop a 240GB or so SSD in it (those run about $30 these days) and add another 8GB RAM to.. bring it to half of max. (Did I also mention it’s got 4 RAM slots and supports up to 32GB RAM? No? Well, it does.) Then I’ll basically leave it alone. The spinning disk in there hurts performance a good deal, as they always do, but it’s a pretty decent machine otherwise, and it did for sure boot into an activated install of Windows 10 Pro. With shipping costs, this ran me a total of $105.

I like the way the Dell SFFs are done up (they just seem really solid and.. dense, really, which is nice), but I actually think I prefer the HP layout. It’s a bit bigger than the Optiplex SFFs, but all of the bays are on a swing-out bracket, and there were even spare mounting screws included (in a designated spot, from the factory) for adding additional drives. There’s a 3.5” and a slim 5.25” external bay, plus one 2.5” and one 3.5” internal bay. So, with some additional commodity mounting brackets, you could slot a total of 3 SSDs in here and have a pretty decent little storage server. Expandability is pretty nice on here. It of course uses low-profile cards, as does most any SFF-style machine, but three total slots is pretty good, and there’s a good number of USB 3 ports on the front and back. (And, of course, there’s the vPro management stuff. There’s also one somewhat neat thing that tends to get glossed over: the internal speaker’s hooked to the sound card, so you have actual audio without having to hook up speakers. Not good audio, but not just the 1981-style PC beeper either.) One big drawback here, though, is that the power supply is totally nonstandard. Sometimes these things will have an SFX or TFX power supply, which is at least pin-compatible with ATX, just in a weird size. This doesn’t; the power going to the motherboard is totally weird. There’s like 3 different, beefy 6-pin connectors that would be at home in an Amiga. It’s a good PSU in there, but if it goes out, the machine is unlikely to be salvageable. Still, that’s a lot of good machine and options for the $105.

Now, am I going to use this as a desktop PC? Of course not. It’ll work fine for that purpose, but that’s not really why I wanted to get one. I also got a 4-port GigE card (also HP, with an Intel chipset) that uses a PCIe x4 slot, and that’s been added to it. In essence, this is going to become a router. Commodity WiFi routers cost about this same amount, but not ones that can be loaded down with Proxmox (and/or maybe Kubernetes) and pfSense. (And obviously WiFi routers have WiFi, and this doesn’t. But I’m just going to keep using my cheap TP-Link router and make it not do routing anymore. It’ll just have to manage WiFi and the what-passes-for-mesh networking I have.) So, for $100, another $30 or so for the NIC (you don’t have to get a quad-port one, but they all run about the same price via the eBay), and about another $60 in some upgrades I don’t really have to do, I’ll have a pretty decent home router and edge server. And it’ll be way easier to do WireGuard up to real cloud stuff too. And I can maybe finally use the IPv6 block I’ve had forever. And VLANs, because what every home network needs is 4 more networks laid on top of it!

In conclusion, if you need a decent amount of computing power, but don’t have or don’t want to spend a bunch of cash on a new build.. old business desktops are a thing to check out. $125ish for a machine that you can then slap an SSD and some sort of half-height video card into, and play some games or get some work done is a great deal - it’s excessively hard to compete with that by building your own from scratch.

At the end of Pinocchio, the puppet becomes real

Or, the continuing saga of the original “dumb PC”!

The “dumb PC” has seen a lot of changing roles and changing uses since I set it up. Originally, its purpose in life was “I have this case, what’s about the cheapest PC I can build into it?” and part of that goal was to re-use some spare parts I had from refurbishing and upgrading an HP all-in-one I managed to take home. Ultimately, building a system with even used parts around an old Athlon II X2 CPU was pretty cost prohibitive, and I ended up putting together a pretty cheap 9th-gen Intel Core system. Doing that gave way to trying to use a Linux desktop for the first time in.. well, decades, which went well. But, yano, games, so Windows 10 it was, and it got a fairly decent video card. It ended up becoming my main system, especially after the pandemic hit and working from home was the order. More upgrades ensued, until it went from an 8GB/240GB machine to a 16GB system with a 500GB M.2 SSD and a 1TB SATA SSD. It’s got both my 4k screens on it, and I even got it a cheapish webcam (once demand settled a bit) that actually works pretty well and supports Windows Hello.

At this point, the machine really is my main system - all of my main job stuff is done there, most of the screwing around I do is done there, games are on there (when I have time and interest in playing them), etc. But, one of the things I started to run into was a lack of processor. This was supposed to be a cheap machine and as such it’s got a cheap processor in it - a Pentium Gold G5400, which is based on the 9th generation Core architecture, but is intended for budget systems and appliance use more than anything. It’s only got 2 cores (though it does do HyperThreading), and my workflow depends more and more on virtual machines and Docker and all that, which is a bit much for that CPU. So, I decided it was time to bite the bullet and move from “let’s build a PC for cheap, it’ll be fun!” to “yep let’s build a pretty serious system.”

The one thing I didn’t do is actually stick with Intel. The 9th-gen Cores are great and all, and I’ve had an eye on an i5-9600 that’d swap right into the board, but it bothered me that I’d then have this basically new, current CPU floating around not doing anything, and it’s both too modern and too budget to really get anything out of it on the used market, so I’d want to also get another motherboard to slot it into and use for, I dunno, something. (Plus, with previous upgrades, I did have 8GB of DDR4 and the original 240GB SSD not doing anything.) But, looking at prices for the motherboard plus CPU kinda put me off. And, I kept seeing things on YouTube and whatnot talking about the Ryzen CPUs, and I hadn’t had a really good AMD system in a while.. so that’s what I went with: a Ryzen 7 3700X. That’s an 8 core/16 thread CPU for about the same money as the i5 (6 core/12 thread). On top of that, the new B550/X570 chipsets also just came out, so why not do all the new things?

As a quick aside, one of the first real modern systems (or maybe the first real modern system) I built was a 1.2GHz Athlon Thunderbird. It’s been a long, long time - arguably since the Pentium 4/Athlon XP Barton days - since AMD was not only a power player but legitimately better than Intel. Processors sure are ridiculous these days but it’s nice to kind of revisit those days of building systems and playing Unreal endlessly, and loading up games because it’s just so damned weird to have things that look so good. (And if you want to go back further.. we had a 386DX-40 system back in the halcyon days of Windows 3.1, CPU lawsuits, and the very early Internet. Me and AMD go back a ways, and those DX-40s were fast as hell and bulletproof in the day.)

Anywho, so aside from the processor, I also ended up with an ASUS Prime B550 motherboard to slot it into. The nice thing about the B550 is that it’s PCI-Express 4 capable now, and will support the new Zen 3 processors when those come out too. (So in 6 months or whatever, my VM infrastructure will become properly ridiculous.) It’s also got two PCI-Express M.2 SSD slots, one that maxes out at 2280 and one that goes up to 22110 or whatever the longer one is. And better I/O - the Intel board I have (also an ASUS Prime) has like 4 on-board USB ports total; this one has at least 6, four of them USB 3.1, plus two USB 3.2 ports on top of that. The one thing that kinda sucks about it compared to the other B550 boards I was looking at is that the LAN port is only 1Gb, where other ones have 2.5Gb Ethernet. But by that same token, I’m totally not putting the money down yet for 10GBaseT infrastructure, so not gonna worry too hard about that.

I threw that all together and.. holy crap Docker starts so fast now. I did a test Windows install to the 240GB SSD and kept getting up to do other things while it did its thing, only to come back 20 seconds later to a reboot, and then confusion because it wasn’t erroring out, it had just blown through the install steps that quickly. I did run a Cinebench R20 test, and it benches about 6 times faster. The NVMe stuff is also vastly improved - somehow or another, my drive was running at PCI-E 2.0 with just 2 lanes, even though it supports PCI-E 3.0 x4. (Why this was I don’t know.) I haven’t run games or 3DMark yet, but I don’t expect a huge increase there; I’m still on the older RX 570 GPU and I have a hard time justifying that expense. That said, it’s definitely snappier, which alone is an accomplishment - things are just so damned fast nowadays that it’s hard to really get a machine that feels faster, but this upgrade does it. To be sure, this was a pretty massive upgrade - it’s 4 times the cores and threads, on an architecture that’s perhaps a bit faster than the Coffee Lake stuff now - but when you factor in the cost, it’s pretty impressive.
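For a rough sense of why fixing the drive's link mattered, here's a back-of-the-envelope sketch of theoretical PCIe bandwidth. It assumes the standard per-lane transfer rates and line encodings for each generation (8b/10b for Gen 2, 128b/130b for Gen 3 and 4) and ignores packet and protocol overhead, so real drive throughput will be lower and depends on the drive itself. The function name `link_bandwidth_gbps` is just made up for illustration.

```python
# Theoretical one-direction link bandwidth for a few PCI-Express generations.
# Values are (transfer rate in GT/s per lane, line-encoding efficiency).
# Assumption: packet/protocol overhead is ignored, so these are upper bounds.
PCIE_GENS = {
    2: (5.0, 8 / 10),      # Gen 2: 5 GT/s, 8b/10b encoding
    3: (8.0, 128 / 130),   # Gen 3: 8 GT/s, 128b/130b encoding
    4: (16.0, 128 / 130),  # Gen 4: 16 GT/s, 128b/130b encoding
}


def link_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Return usable bandwidth in gigabytes per second (one direction)."""
    rate_gt, efficiency = PCIE_GENS[gen]
    # Each transfer moves one bit per lane; divide by 8 to get bytes.
    return rate_gt * efficiency * lanes / 8


old = link_bandwidth_gbps(2, 2)  # what the drive was actually negotiating
new = link_bandwidth_gbps(3, 4)  # what the drive supports
print(f"PCIe 2.0 x2: {old:.2f} GB/s")
print(f"PCIe 3.0 x4: {new:.2f} GB/s")
print(f"ratio: {new / old:.1f}x")
```

That works out to roughly 1 GB/s versus roughly 3.9 GB/s of link headroom; whether a given drive can actually saturate the faster link is a separate question.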

So, loads of SSD, a video card I’m pretty happy with, and a real fast processor. This thing is a pretty properly good computer now. The current final specs on it:

- AMD Ryzen 7 3700X 8-core/16-thread unlocked CPU (3.6GHz base clock)

- ASUS PRIME B550M-A/CSM B550 chipset motherboard

- 16GB Crucial 2666MHz DDR4 RAM (2x8GB)

- AMD Radeon RX 570XT GPU (6GB I think? Maybe 3)

- 500GB Mushkin M.2 NVMe SSD (PCI-E 3.0 x4)

- 1TB Patriot Burst SATA SSD

I’m pretty happy with it. So, I’m done now, right? Well, of course not! I am a terrible nerd after all, and there are slots and things that aren’t being used.

The first thing in line is the case and PSU - the Rosewill case it’s in is cheap, I’ve cut myself each time I’ve had to work in it, and it’s cramped with basically no cable management. Airflow is OK given the case fans and mesh front, but cable routing is a pain. And, the RAIDMAX 500 watt PSU that’s in it now makes odd noises sometimes. (The fan’s dying.) I’m not terribly worried about power consumption - everything on the UPS there, including the screens, the MacBook Pro, its screens, and the dock/charger, uses about 300W all together - but a bad fan is no bueno, and I want a modular PSU anyway. So, probably going with a Thermaltake or Fractal Design case and some sort of real PSU (Tt, Corsair, or Silverstone probably) for the next round. I have like maybe 1cm of clearance between the GPU fans and the front panel cabling/bottom of the case on the current one, so this is now pretty needful.

Next up is RAM. 16GB good, 32GB better, RAM is cheap. 4x8GB DDR4-3200 or whatever runs like $130, and that board has 4 slots for a maximum of either 64 or 128GB. NVMe SSDs are cool but actual RAM is better. Speaking of, I’d also love to jettison the SATA SSD and drop a 1TB NVMe one (or two!) in there. Even those are pretty inexpensive - I won’t get a Samsung 970 EVO/PRO for $100ish, but the WD Black drives are pretty damned good too. (I believe I get RAID on the 3700X too, so a RAID 0 of two NVMe drives sounds like I need a moist towelette.)

I’d like to do up a faster video card, but until the case and power supply (and probably RAM) are sorted, I’d rather not. It’s just too cramped in there as is. And I kinda want an RTX card (yes, for Minecraft), and those are still way more than I want to pay.

So, not but a few months in, and the fun plaything modern PC turned into my main machine, then turned into a real boy. I mean computer. And, I’ve already repurposed the Intel stuff into a new machine - the new and improved sinbox! So many weird computing projects.